Language and AI

Artificial intelligence does not speak; it generates.

In a world disrupted by language models such as ChatGPT, EFL scientifically examines what these AIs say about our own language, our cognitive structures… and their limitations. Language becomes both an object of analysis and a technical tool, at the crossroads of the humanities and computer science.

Linking language and computers

Between segmentation and simulation, artificial intelligence attempts to reproduce the complexity of human language. But can it grasp its intention, variation, and contextual depth?

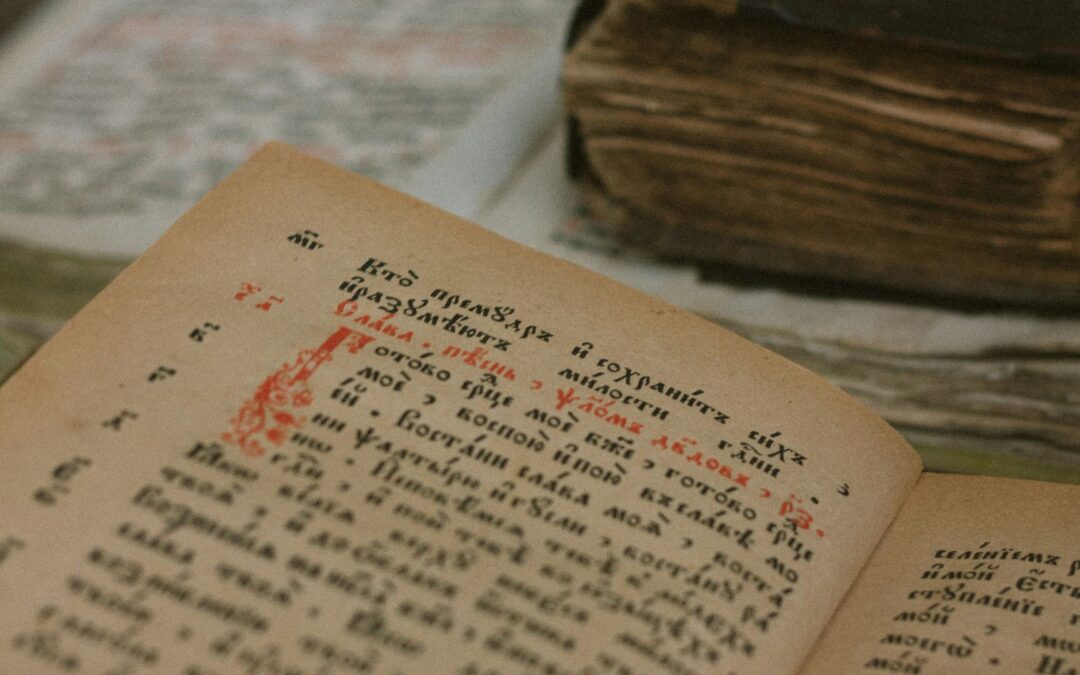

© Pexels

Co-coordinators:

Benoît Crabbé (LLF – Université Paris Cité)

Joseph Le Roux (LIPN – Université Sorbonne Paris Nord)

Why combine linguistics and AI today?

Since the emergence of large language models (LLMs), language has become a raw material, an interface, and a technical issue for artificial intelligence. This theme aims to answer two questions:

- What can AI teach us about human language?

- What are the linguistic and cognitive limitations of current AI?

It is not just a question of improving the technical performance of models, but of understanding the epistemological, linguistic, social, and ethical issues related to their use.

Scientific questions related to the topic

Understanding language through models

- Using AI as a tool to explore linguistic regularities (morphology, syntax, semantics, discourse).

- Testing hypotheses on the cognitive structuring of language by comparing human language processing with artificial models.

Analyzing models as linguistic objects

- Studying the internal “grammar” of LLMs (e.g., GPT-4) and their learning modes.

- Questioning their ability to handle variation, polyphony, ambiguity, and pragmatics.

Exploring the social uses and applications of automated language

- Generative AI and linguistic inclusion/exclusion.

- Impacts of corpus biases on generated responses.

- Issues of responsibility, interpretability, and appropriation of language technologies.

Methodologies used

- Computational linguistics: formal modeling, extraction of regularities, corpus annotation.

- Comparative experimental analysis: human performance vs. machine performance on specific linguistic tasks.

- Generated vs. natural corpora: comparison of structures, registers, and coherence.

- Critique of training data: linguistic diversity, representativeness, blind spots.

- Interdisciplinarity: intersection of linguistics, computer science, philosophy, and sociology.
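As an illustration of the "generated vs. natural corpora" comparison listed above, here is a minimal sketch in Python. It is not the consortium's method. The two text samples are invented for the example, the tokenizer and sentence splitter are deliberately crude, and the function names (`tokenize`, `split_sentences`, `profile`) are hypothetical. It computes two simple surface indicators, type-token ratio and mean sentence length, of the kind that could serve as a first point of comparison between registers.

```python
import re
from statistics import mean


def tokenize(text):
    """Lowercase word tokenizer; a crude stand-in for a real tokenizer."""
    return re.findall(r"[^\W\d_]+(?:'[^\W\d_]+)?", text.lower())


def split_sentences(text):
    """Split on sentence-final punctuation; adequate only for toy input."""
    return [s for s in re.split(r"[.!?]+", text) if s.strip()]


def profile(text):
    """Return simple surface statistics for one text sample."""
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text contains no word tokens")
    sentences = split_sentences(text)
    return {
        "tokens": len(tokens),
        # Share of distinct word forms: a rough lexical-diversity indicator.
        "type_token_ratio": len(set(tokens)) / len(tokens),
        # Average number of word tokens per sentence.
        "mean_sentence_length": mean(len(tokenize(s)) for s in sentences),
    }


# Toy samples invented for illustration; not drawn from any real corpus.
natural = "Well, I dunno. She said she'd come, but then she didn't. Typical!"
generated = "She said that she would come. However, she did not come. This is typical."

for label, sample in (("natural", natural), ("generated", generated)):
    print(label, profile(sample))
```

On real data, such indicators would only be meaningful over large, comparable samples, and with a tokenizer suited to the language and register under study.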

Concrete applications and societal issues

- Training: development of intelligent teaching tools that are sensitive to linguistic variation.

- Ethics and regulation: critical evaluation of the use of LLMs in sensitive contexts (education, health, justice).

- Inclusion: exploration of AI solutions for audiences who have limited familiarity with the standard language.

- System improvement: integration of linguistic contributions to design more robust, explainable, and fair AI systems.

Partnerships and involvement in the EFL consortium

- Collaboration with teams specializing in formal and computational linguistics (LLF, LaTTiCe, LIPN).

- Dialogue with other work packages: linguistic diversity (to enrich data), cognition (to compare processing models), society (to examine usage).

- Implementation of shared platforms for natural language processing and for comparing generated and observed corpora.

In summary

AI offers a unique opportunity to rethink language as a system, a practice, and a technology. The EFL project aims to make it a critical testing ground, shedding light on both the cognitive foundations of language and the ethical contours of its automation.
